Speech and Voice Recognition Market Size, Share & Trends

Speech and Voice Recognition Market by Technology (Speaker Identification, Speaker Verification, Automatic Speech), Application (Voice Search, Voice Command, Real Time Transcription, Voice Biometrics, Customer Service), Mode - Global Forecast to 2030

OVERVIEW

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

The global speech and voice recognition market was estimated at USD 8.49 billion in 2024 and is projected to grow from USD 9.66 billion in 2025 to approximately USD 23.11 billion by 2030, at a CAGR of 19.1% from 2025 to 2030. The market is expected to grow rapidly over the next decade, driven by significant advancements in artificial intelligence, deep learning, and natural language processing. Increasing demand for voice-enabled and hands-free technologies in smartphones, smart homes, and vehicles is fueling this growth. The rise of virtual assistants and voice-based customer service is also contributing to the expansion of the market. Additionally, industries such as healthcare and banking are integrating voice technologies to enhance efficiency and improve the user experience.
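The growth rate quoted above can be checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function names `cagr` and `project` are hypothetical, and it assumes five compounding periods between the 2025 and 2030 values, which the report does not state explicitly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate, returned as a fraction (0.19 = 19%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures from the overview: USD 9.66 billion (2025) to USD 23.11 billion (2030).
# Assumption: 2025 -> 2030 is treated as 5 compounding periods.
rate = cagr(9.66, 23.11, 5)
print(f"Implied CAGR 2025-2030: {rate:.1%}")

# Compounding the 2025 value at the implied rate recovers the 2030 forecast.
print(f"Projected 2030 value: USD {project(9.66, rate, 5):.2f} billion")
```

Under that assumption the implied rate comes out at roughly 19.1%, consistent with the figure stated in the overview.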

KEY TAKEAWAYS

- BY TECHNOLOGY: The technologies include automatic speech recognition (ASR), which is critical for converting speech to text, and speaker voice recognition (SVR), which is used to identify or verify users.

- BY DEPLOYMENT MODE: Cloud-based deployment is growing in speech and voice recognition due to its ability to handle large-scale voice data, support real-time processing, enable faster updates to AI models, and reduce the need for local computing resources.

- BY APPLICATION: Voice search applications lead the market due to their widespread use in smartphones and smart devices, faster query input compared to typing, and growing integration with AI-powered virtual assistants such as Siri, Alexa, and Google Assistant.

- BY VERTICAL: Consumer electronics lead the market due to the integration of voice-enabled features in smartphones, smart speakers, TVs, and wearables, driving demand for seamless and hands-free interaction.

- BY REGION: Asia Pacific is expected to be the fastest-growing region, with a CAGR of 20.4%, due to the rising adoption of smartphones, increasing internet penetration, rapid digital transformation, and strong government initiatives supporting AI and smart technologies in countries such as China, India, and Japan.

- COMPETITIVE LANDSCAPE: The major market players have adopted organic growth strategies, such as product launches. For instance, Microsoft, IBM, Alphabet, and Amazon.com Inc. have developed new features or launched products.

The speech and voice recognition market is projected to grow rapidly over the next decade due to significant advancements in artificial intelligence, deep learning, and natural language processing. Demand for the technology is driven by its rising use in voice-enabled and hands-free devices for smartphones and smart homes. The rise of virtual assistants and voice-based customer service also drives market growth. Additionally, industries such as healthcare and banking are integrating voice technologies for improved efficiency and user experience.

TRENDS & DISRUPTIONS IMPACTING CUSTOMERS' CUSTOMERS

The impact on consumers' business emerges from customer trends or disruptions. Hotbets are the clients of speech and voice recognition technology providers, and target applications are the clients of speech and voice recognition providers. Shifts, which are changing trends or disruptions, will impact the revenues of end users. The revenue impact on end users will affect the revenues of hotbets, which will in turn affect the revenues of speech and voice recognition providers.


MARKET DYNAMICS

Drivers

- Extensive use of speech and voice recognition in smart appliances

- Rising need for real-time transcription in virtual meetings and industrial settings

Restraints

- Concerns regarding data privacy and security

- Multilingual and intent recognition challenges of voice assistants

Opportunities

- Integration of speech and voice recognition technology with mobile applications

- Rising deployment of speech and voice technology in autonomous vehicles

Challenges

- Limited awareness regarding availability and benefits of voice assistant technologies

- Lack of standardized platform for developing customized products


Driver: Extensive use of speech and voice recognition in smart appliances

Widespread use of speech and voice recognition in smart appliances transforms how users interact with household devices, allowing hands-free control, personalization through learned voice profiles, and seamless integration with virtual assistants such as Alexa, Siri, and Google Assistant. This trend is driven by advancements in natural language processing (NLP), far-field voice recognition, and edge AI, which enhance functionality and accessibility, especially for the elderly and assisted-living users. As a result, voice-enabled appliances improve energy efficiency, user convenience, and safety. Consequently, manufacturers are reshaping product strategies to focus on voice interface technologies as a key differentiator in the global smart appliance market.

Restraint: Concerns regarding data privacy and security

Data privacy and security concerns remain a significant barrier to the broader adoption of speech and voice recognition technologies, as these systems process sensitive personal information. Users fear unauthorized access, data misuse, and surveillance, particularly with cloud-based models that involve third-party data transfers. Stringent regulations, including the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA), complicate implementation, particularly for global companies. While technologies such as on-device processing and federated learning offer potential solutions, lack of trust in data protection is likely to remain a restraint until stronger privacy safeguards are fully implemented.

Opportunity: Rising deployment of speech and voice technology in autonomous vehicles

The growing use of speech and voice recognition in autonomous vehicles presents a key market opportunity, enabling hands-free control of navigation, calls, climate, and diagnostics for safer and more comfortable travel. Advanced NLP allows natural conversations, while AI assistants personalize the in-vehicle experience by learning user preferences. Automakers are investing in connected ecosystems where voice complements touch and gesture controls. Voice biometrics further enhances security through driver identification. With advancements in 5G, edge computing, and vehicle sensors, voice technologies enable a responsive and intelligent driving environment.

Challenge: Limited awareness regarding the availability and benefits of voice assistant technologies

Limited consumer awareness remains a major barrier to the widespread adoption of voice assistant technology, as many users are unaware of its full capabilities beyond basic commands. This gap is more pronounced in rural or underserved areas due to a lack of digital infrastructure, limited language support, or perceived setup complexity. Valuable features such as voice-controlled smart home devices, secure authentication, and accessibility tools often go unused. Bridging this gap requires educational efforts from tech providers, the use of local languages, and integration into everyday services. Marketing, demonstrations, and personalized use cases can help drive adoption and user trust.

Speech and Voice Recognition Market: COMMERCIAL USE CASES ACROSS INDUSTRIES

| COMPANY | USE CASE DESCRIPTION | BENEFITS |
|---|---|---|
| Microsoft | Azure AI Speech offers real-time speech-to-text, text-to-speech, and speech translation services | Enables high-accuracy voice-enabled applications; supports multilingual transcription; enhances accessibility |
| IBM | IBM Watson Speech-to-Text for transcription and speech analytics in customer service | Fast, accurate transcription in multiple languages; improves self-service and agent support efficiency |
| Google | Google Cloud Speech-to-Text and Voice Search used in Android and Google services | Real-time and batch transcription with high accuracy; enables hands-free Google Search and voice commands |
| Amazon | Alexa voice assistant supports music, tasks, smart home control, and real-time information | Voice search and command features for personalized user experience and smart ecosystem integration |
| Apple | Speech-to-Text and Speaker Recognition technologies integrated in Apple devices | Accurate domain-specific transcription; secure speaker identification across iPhones, Siri, and other devices |

Logos and trademarks shown above are the property of their respective owners. Their use here is for informational and illustrative purposes only.

MARKET ECOSYSTEM

The speech and voice recognition ecosystem comprises a network of interconnected stakeholders, including hardware manufacturers, software providers, system integrators, and end users. Hardware providers deliver the physical devices and components that enable voice-based technologies. Their contributions range from capturing audio signals to optimizing edge and cloud computing. Software providers develop core technologies such as speech-to-text, voice biometrics, and natural language processing (NLP) engines that power voice-enabled applications. System integrators play a key role in embedding these technologies into various platforms, such as mobile devices, enterprise solutions, automotive systems, and smart home devices, ensuring seamless functionality and compatibility. End users, including businesses and consumers, interact with these integrated solutions for applications such as virtual assistants, voice search, customer service automation, and accessibility tools, driving adoption and continuous innovation across the ecosystem.


MARKET SEGMENTS


Speech and Voice Recognition Market, by Technology

Automatic Speech Recognition (ASR) is leading the speech and voice recognition industry because it enables real-time, accurate conversion of spoken language into text, making voice interaction with devices more natural and efficient. ASR powers a wide range of applications, from virtual assistants and transcription services to customer support automation, improving accessibility and user experience. Its ability to support multiple languages and dialects, along with advancements in AI and machine learning, enhances accuracy and adaptability, driving broad adoption across industries such as healthcare, automotive, and consumer electronics.

Speech and Voice Recognition Market, by Deployment Mode

The cloud deployment model is increasingly adopted in speech and voice recognition because it offers scalable, cost-effective, and flexible access to powerful AI and processing resources without heavy upfront investments. Cloud-based solutions enable real-time updates, easy integration, and cross-device synchronization, allowing developers to deploy and improve voice applications quickly. Additionally, cloud platforms support large-scale data processing and storage, which are essential for training advanced models and handling diverse voice inputs.

Speech and Voice Recognition Market, by Application

Voice search is leading the speech and voice recognition market because it offers a faster, more convenient, and hands-free way for users to access information and perform tasks. With the rise of smart devices such as smartphones, smart speakers, and wearables, consumers increasingly prefer speaking over typing for searches, making interactions more natural and accessible. Voice search also supports multilingual and context-aware queries, improving user experience. Its integration with popular platforms such as Google Assistant, Siri, and Alexa drives widespread adoption, making it a dominant application that fuels market growth.

Speech and Voice Recognition Market, by Vertical

The consumer electronics vertical is expected to witness the highest growth rate in the speech and voice recognition market because of the rapid adoption of smart devices, such as smartphones, smart speakers, wearables, and TVs, which increasingly rely on voice interfaces for seamless, hands-free user experiences. Continuous innovation and integration of voice assistants such as Alexa, Siri, and Google Assistant enhance device functionality and convenience. Additionally, rising consumer demand for personalized, intuitive, and accessible technology drives manufacturers to embed voice recognition features across a wide range of products, accelerating market growth in this sector.

REGION

Asia Pacific is expected to be the fastest-growing region in the global speech and voice recognition market during the forecast period

Asia Pacific is expected to witness the fastest growth in the speech and voice recognition market due to rapid digital transformation, increasing smartphone penetration, and expanding internet access across countries such as China, India, and Japan. Government initiatives promoting AI and smart technologies, combined with a large, tech-savvy population, fuel demand for voice-enabled applications. Additionally, rising investments from local and global companies in support for region-specific languages and dialects help drive adoption, making Asia Pacific a key growth hub in the market.

Speech and Voice Recognition Market: COMPANY EVALUATION MATRIX

Among speech and voice recognition companies, Microsoft (Star) leads with a strong presence and diverse product portfolio, driving widespread adoption across industries such as healthcare, automotive, and customer service, while continuously innovating to maintain its dominance. Baidu (Emerging Leader) is gaining traction with its AI-powered voice recognition technologies and expanding footprint in the Asia Pacific region, focusing on natural language processing and regional language support, which positions it well for strong growth and advancement toward the leaders' quadrant.


KEY MARKET PLAYERS

MARKET SCOPE

| REPORT METRIC | DETAILS |
|---|---|
| Market Size in 2024 (Value) | USD 8.50 Billion |
| Market Forecast in 2030 (Value) | USD 23.11 Billion |
| Growth Rate | CAGR of 19.1% from 2025-2030 |
| Years Considered | 2021–2030 |
| Base Year | 2024 |
| Forecast Period | 2025–2030 |
| Units Considered | Value (USD Million) |
| Report Coverage | Revenue Forecast, Company Ranking, Competitive Landscape, Growth Factors, and Trends |
| Segments Covered | By Technology, Deployment Mode, Application, and Vertical |
| Regional Scope | North America, Asia Pacific, Europe, the Middle East & Africa, and South America |

WHAT IS IN IT FOR YOU: Speech and Voice Recognition Market REPORT CONTENT GUIDE

DELIVERED CUSTOMIZATIONS

We have successfully delivered the following deep-dive customizations:

| CLIENT REQUEST | CUSTOMIZATION DELIVERED | VALUE ADDS |
|---|---|---|
| Speech Recognition Software Provider | | |
| Cloud-based Speech Solution Provider | | |
| Consumer Electronics OEM | | |
| Automotive Software Provider | | |

RECENT DEVELOPMENTS

- June 2025: Google (US) launched Search Live, an experimental feature that allows users to interact with Search using their voice. Available through the Google app on both Android and iOS, it's part of the new AI Mode that is now accessible to all users in the US.

- April 2025: Voicegain (US) introduced Voicegain Casey, an AI Voice Agent designed for payers, leveraging generative AI to revolutionize the entire call center experience.

- March 2025: Microsoft (US) launched a new AI assistant for healthcare professionals, designed as an all-in-one solution that integrates voice dictation, ambient listening, and generative AI capabilities.

- December 2024: Amazon (US) announced the general availability of its new multilingual streaming speech recognition models (ASR-2.0) in Amazon Lex. These models improve recognition accuracy through two region-specific groups: a European model supporting Portuguese, Catalan, French, Italian, German, and Spanish, and an Asia Pacific model supporting Chinese, Korean, and Japanese.

- March 2024: Apple (US) introduced transcripts for Apple Podcasts, a new feature that enhances accessibility and makes it easier to navigate episodes. With transcripts, users can view the entire episode text, search for specific words or phrases, and tap any part of the transcript to start playback from that exact moment.


Methodology

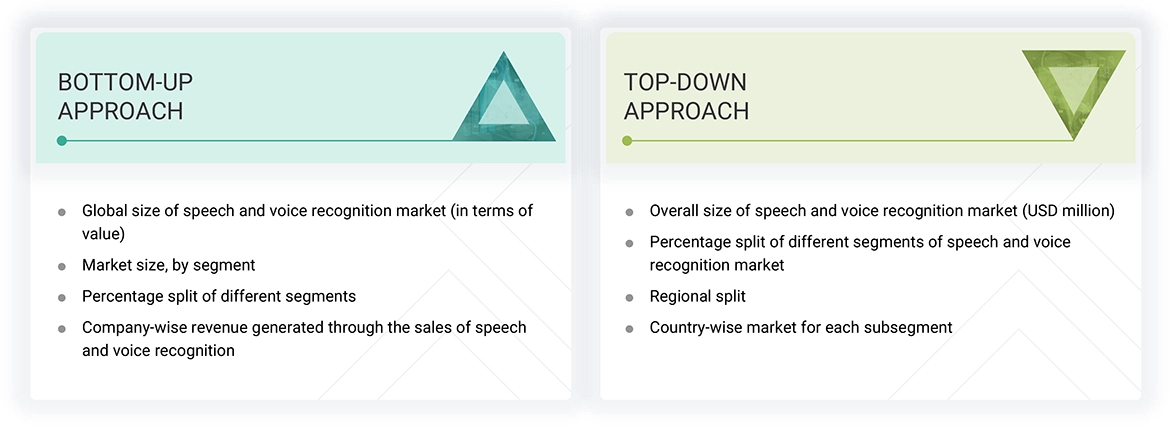

The study used four major activities to estimate the speech and voice recognition market size. Exhaustive secondary research was conducted to gather information on the market and its peer and parent markets. The next step was to validate these findings, assumptions, and market size with industry experts across the value chain through primary research. Both top-down and bottom-up approaches were employed to estimate the total market size. Finally, market breakdown and data triangulation methods were used to estimate the market size for different segments and subsegments.

Secondary Research

In the secondary research process, various sources were used to identify and collect information on the speech and voice recognition market. Secondary sources for this research study include corporate filings (such as annual reports, investor presentations, and financial statements); trade, business, and professional associations; white papers; certified publications; and articles by recognized authors, directories, and databases. The secondary data was collected and analyzed to determine the overall market size, and was further validated through primary research.

List of key secondary sources

| Source | Web Link |
|---|---|
| Ministry of Electronics & Information Technology | https://www.meity.gov.in/esdm/standards |
| Institute of Electrical and Electronics Engineers (IEEE) | https://www.ieee.org/ |
| Semiconductor Industry Association (SIA) | https://www.semiconductors.org/ |

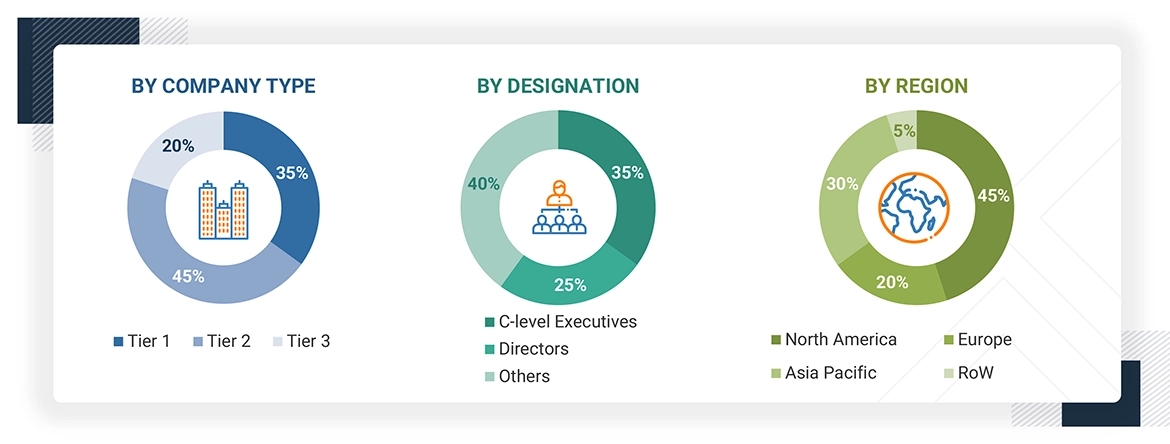

Primary Research

Primary interviews were conducted to gather insights on market statistics, revenue data, market breakdowns, size estimations, and forecasting. Additionally, primary research was used to understand various technologies, types, applications, and regional trends. Interviews with demand-side stakeholders, including CIOs, CTOs, CSOs, and customer/end-user installation teams using speech and voice recognition offerings and processes, were also conducted to understand their perspective on suppliers, products, and component providers, as well as their current and future use of speech and voice recognition, which will impact the overall market. Several primary interviews were conducted across major countries in North America, Europe, Asia Pacific, and RoW.


Market Size Estimation

In the complete market engineering process, top-down and bottom-up approaches and several data triangulation methods have been used to estimate and forecast the overall market segments and subsegments listed in this report. Key players in the market have been identified through secondary research, and their market shares in the respective regions have been determined through primary and secondary research. This entire procedure includes the study of annual and financial reports of the top market players and extensive interviews for key insights (quantitative and qualitative) with industry experts (CEOs, VPs, directors, and marketing executives).

All percentage shares, splits, and breakdowns have been determined using secondary sources and verified through primary sources. All the parameters affecting the markets covered in this research study have been accounted for, viewed in detail, verified through primary research, and analyzed to obtain the final quantitative and qualitative data. This data has been consolidated and supplemented with detailed inputs and analysis from MarketsandMarkets and presented in this report. The following figure represents this study's overall market size estimation process.

Speech and Voice Recognition Market: Top-Down and Bottom-Up Approach

Data Triangulation

Once the overall size of the speech and voice recognition market has been determined using the methods described above, it has been divided into multiple segments and subsegments. Market engineering has been performed for each segment and subsegment using market breakdown and data triangulation methods, as applicable, to obtain accurate statistics. Various factors and trends from the demand and supply sides have been studied to triangulate the data. The market size has been validated using both top-down and bottom-up approaches.

Market Definition

Speech recognition is the ability of a machine or program to identify words and phrases in spoken language and convert them to a machine-readable format. In contrast, voice recognition aims to identify the person who is speaking by capturing the speech patterns unique to each individual. Speech and voice recognition is the technology by which sounds, words, or phrases spoken by human beings are converted into electrical signals. These signals are then transformed into coding patterns. Speech and voice recognition software solutions are used for dictation, system control, commercial and industrial applications, voice dialing, and other purposes. Many speech and voice recognition applications and devices are available, but the more advanced solutions rely on artificial intelligence and machine learning. They combine grammar, syntax, structure, and composition of audio and voice signals to interpret and analyze human speech.

Key Stakeholders

- Banking, financial services, and insurance players

- Speech and voice recognition application developers/traders/suppliers

- Speech and voice recognition patent assignees

- Smart home and home automation product manufacturers

- Autonomous car developers and manufacturers

- Research institutes and organizations

- Virtual assistant product manufacturers

- Consumer electronics manufacturers

- Healthcare service providers

- Government agencies

- Speech and voice recognition application program interface (API) providers

- Market research and consulting firms

Report Objectives

- To describe and forecast the speech and voice recognition market, by technology, deployment mode, application, vertical, and region, in terms of value

- To describe and forecast the market for various segments across four main regions, namely, North America, Europe, Asia Pacific, and RoW, in terms of value

- To strategically analyze micromarkets with regard to individual growth trends, prospects, and contributions to the markets

- To provide detailed information regarding drivers, restraints, opportunities, and challenges influencing market growth

- To analyze opportunities for stakeholders by identifying high-growth segments in the market

- To provide a detailed overview of the speech and voice recognition value chain

- To strategically analyze key technologies, average selling price trends, trends impacting customer business, ecosystem, regulatory landscape, patent landscape, Porter's five forces, import and export scenarios, trade landscape, key stakeholders, buying criteria, and case studies pertaining to the market under study

- To strategically profile key players in the speech and voice recognition market and comprehensively analyze their market share and core competencies

- To analyze competitive developments such as partnerships, acquisitions, expansions, collaborations, and product launches, along with R&D in the speech and voice recognition market

Available customizations:

With the given market data, MarketsandMarkets offers customizations according to the specific requirements of companies. The following customization options are available for the report:

Regional Analysis:

- Detailed analysis and profiling of additional market players (up to 5)

- Additional country-level analysis of the speech and voice recognition market

Product Analysis

- The product matrix provides a detailed comparison of the product portfolio of each company in the speech and voice recognition market.

Key Questions Addressed by the Report

Which companies dominate the speech and voice recognition market, and what strategies do they employ to enhance their market presence?

The major companies in the speech and voice recognition market include Microsoft (US), IBM (US), Alphabet (US), Amazon (US), and Apple Inc (US). These players adopt significant strategies, including product launches and developments, collaborations, acquisitions, and expansions.

Which region has the highest speech and voice recognition market potential?

The Asia Pacific market is projected to grow at the highest CAGR during the forecast period.

What are the opportunities for new market entrants?

Customer preference for cloud-based speech-to-text software, increasing popularity of online shopping, and development of personalized applications for users will offer potential growth opportunities in this market.

What are the key factors driving the speech and voice recognition market?

Market drivers include escalated use of speech and voice recognition software by healthcare professionals, extensive penetration of speech and voice recognition technologies in smart appliances, and increasing demand for speech and voice recognition technologies for transcription.

What key speech and voice recognition applications will drive market growth in the next five years?

The significant consumers of speech and voice recognition are consumer electronics, healthcare, and government sectors.
