AI Inference Market Size, Share & Trends, 2025 To 2030

AI Inference Market by Compute (GPU, CPU, FPGA), Memory (DDR, HBM), Network (NIC/Network Adapters, Interconnect), Deployment (On-premises, Cloud, Edge), Application (Generative AI, Machine Learning, NLP, Computer Vision) - Global Forecast to 2030

OVERVIEW

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

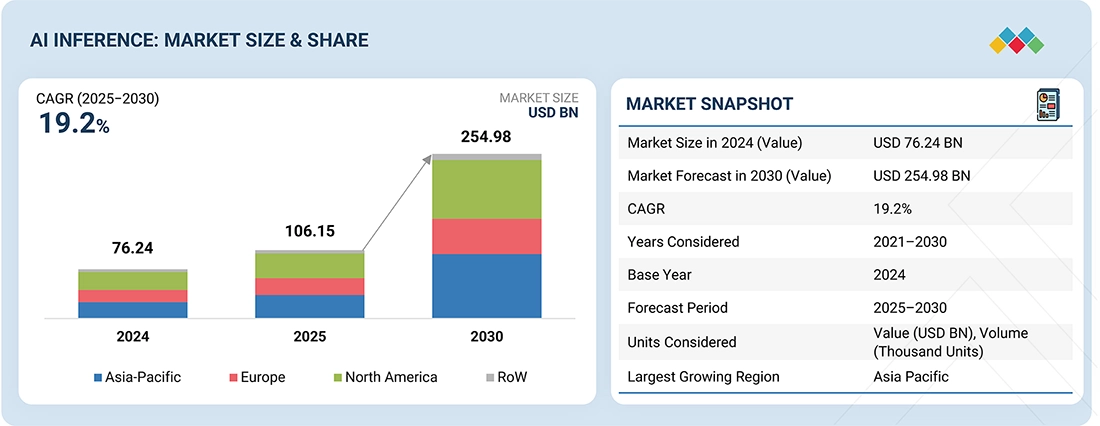

The AI inference market is expected to grow from USD 106.15 billion in 2025 to USD 254.98 billion by 2030, at a CAGR of 19.2% from 2025 to 2030. The market is expanding rapidly, driven by advances in generative AI (GenAI) and large language models (LLMs). Demand for AI inference is expected to rise as enterprises prioritize real-time GenAI deployment and hyperscalers expand the infrastructure needed for compute-intensive, data-driven decision-making worldwide.
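As a quick check on the headline figures, the compound annual growth rate is CAGR = (end value / start value)^(1 / years) - 1. The sketch below applies that standard formula to the report's 2025 and 2030 values. The function name `cagr` is our own.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Report figures in USD billion: 2025 estimate and 2030 forecast (5 years apart)
rate = cagr(106.15, 254.98, 5)
print(f"CAGR 2025-2030: {rate:.1%}")  # prints "CAGR 2025-2030: 19.2%"
```

The unrounded result is about 19.16%, which rounds to the stated 19.2%.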

KEY TAKEAWAYS

-

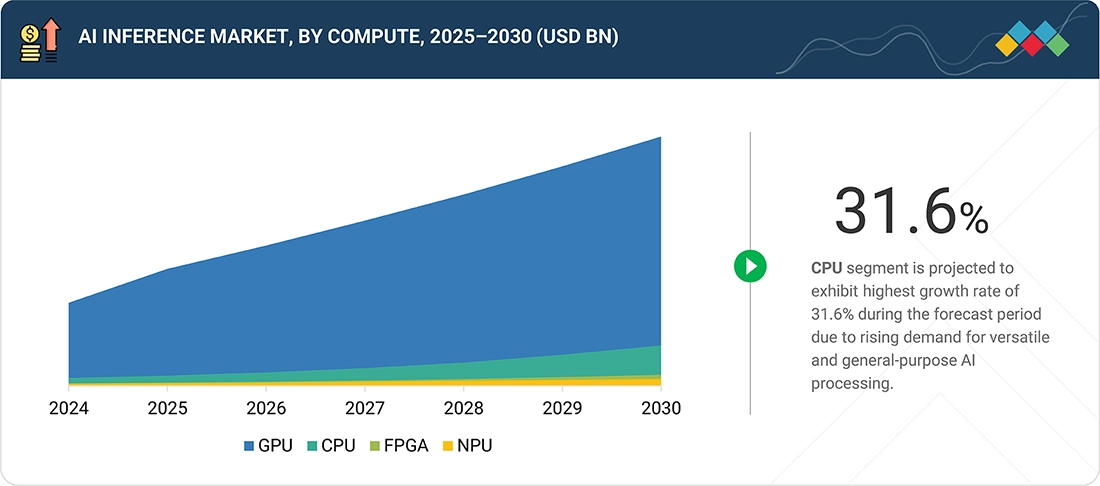

BY COMPUTEThe AI inference market by compute includes GPU, CPU, FPGA, TPU, FSD, Inferentia, T-head, LPU, and other ASICs. The GPU segment is expected to dominate due to its superior parallel processing power and widespread adoption across data centers for large model inference workloads.

-

BY MEMORYThis segment includes DDR and HBM memory technologies. HBM (high bandwidth memory) is gaining momentum, driven by the need for high-speed data transfer and energy efficiency in AI accelerators handling large model inference.

-

BY NETWORKThe network segment includes NICs/network adapters, infiniBand, ethernet, and interconnects. NIC/network adapters are projected to grow the fastest, driven by increasing demand for low-latency communication between AI servers and distributed inference nodes.

-

BY DEPLOYMENTThe AI inference market includes on-premises, cloud, and edge deployments. Cloud-based deployment has the largest market share, driven by scalability, cost savings, and the quick adoption of inference-as-a-service platforms by businesses.

-

BY APPLICATIONThe application segment includes generative AI, rule-based models, statistical models, deep learning, GANs, autoencoders, CNNs, transformer models, machine learning, NLP, and computer vision. Generative AI is projected to grow at the highest CAGR, fueled by expanding adoption of large language and diffusion models.

-

BY END USERThis segment includes consumers, cloud service providers (CSPs), enterprises, healthcare, BFSI, automotive, retail & e-commerce, media & entertainment, government, and others. Cloud service providers hold the largest share due to extensive AI infrastructure deployments and hyperscaler-led inference workloads.

-

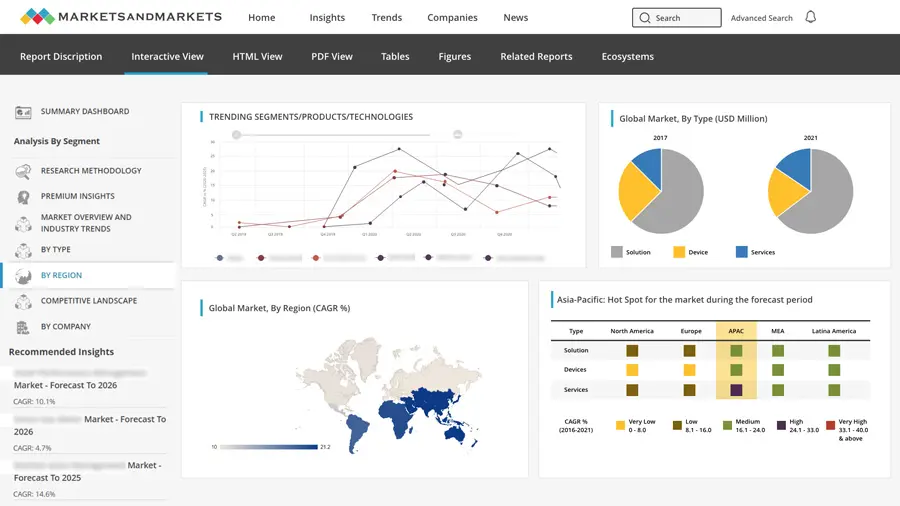

BY REGIONAsia Pacific is expected to grow at the fastest rate, driven by investments in sovereign AI initiatives, hyperscale data centers, and semiconductor ecosystem expansion across China, India, and Japan.

-

COMPETITIVE LANDSCAPEMajor market players have adopted both organic and inorganic strategies, including partnerships and agreements. Key players such as NVIDIA Corporation, Intel Corporation, Advanced Micro Devices, Inc. are driving innovation in GPU, CPU, and FPGAs, focusing on enhancing application-specific solutions to cater to cloud service providers and enterprise end users.

The AI inference market is mainly driven by the rapid adoption of generative AI models and large language model architectures that require high-performance, low-latency computing infrastructure. Growing enterprise focus on real-time decision-making, automation, and cost-effective AI deployment is boosting demand for specialized inference hardware and software optimization. In addition, increasing investments by hyperscalers and semiconductor companies in edge and cloud inference platforms are fueling market growth.

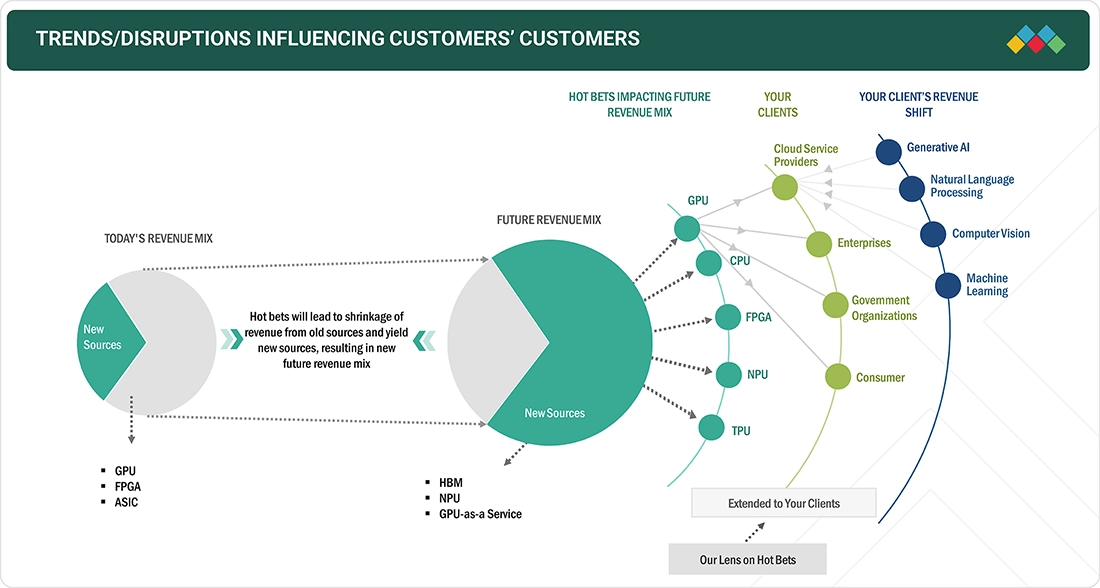

TRENDS & DISRUPTIONS IMPACTING CUSTOMERS' CUSTOMERS

Several trends and disruptions are reshaping the AI inference landscape and directly affecting customers' business models, revenue streams, and technology adoption strategies. The current revenue mix primarily includes traditional components such as GPUs, FPGAs, and ASICs. As AI continues to permeate various sectors, there is growing demand for HBM, NPUs, and GPU-as-a-Service, which are better suited to the intensive computational requirements of AI workloads. The changing revenue mix is directly linked to customer needs, particularly in AI-driven domains such as generative AI, natural language processing, computer vision, and machine learning. As customers transition toward AI-powered solutions, enterprises and cloud providers are expanding their investments in advanced inference hardware to support these workloads efficiently.

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

MARKET DYNAMICS

Drivers

- Growing demand for real-time processing on edge devices

- Growth of advanced cloud platforms offering specialized AI inference services

Restraints

- Computational workload and high power consumption

- Shortage of skilled workforce

Opportunities

- Growth of AI-enabled healthcare and diagnostics

- Advancements in natural language processing for improved customer experience

Challenges

- Data privacy concerns

- Supply chain disruptions

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

Driver: Growing demand for real-time processing on edge devices

The demand for AI inference at the edge is rapidly growing due to the need for low-latency processing in applications like autonomous vehicles, smart devices, industrial IoT, and healthcare. Edge computing is considered essential to decrease latency, bandwidth consumption, and dependence on cloud systems. Over time, this will become a major factor driving the market.

Restraint: Computational workload and high power consumption

High computational demands and power consumption remain key challenges, especially for deploying AI inference in power-sensitive devices like mobile phones and edge devices. These limitations can increase costs and hinder the adoption of AI in resource-limited environments, making it a significant obstacle in the near future.

Opportunity: Growth of AI-enabled healthcare and diagnostics

Healthcare's adoption of AI for diagnostics, imaging, and personalized medicine will increase demand for inference technologies that can process data accurately and efficiently. Early implementations will focus on enhancing diagnostics and improving patient outcomes, with AI solutions beginning to gain regulatory approval and support.

Challenge: Data privacy concerns

Data privacy concerns will greatly influence the AI inference market as regulations like GDPR and CCPA enforce stricter data handling rules. Many organizations face difficulties in adopting privacy-preserving inference methods, which limits AI's use in sensitive fields like healthcare and finance.

AI Inference Market: COMMERCIAL USE CASES ACROSS INDUSTRIES

| USE CASE DESCRIPTION | BENEFITS |
|---|---|
| AI-powered radiation therapy optimization with Intel Corporation and Siemens Healthineers | The implementation achieved a 35x speedup in AI inference time compared to the 3rd Gen Intel Xeon Scalable processors. Contouring a typical abdominal scan with nine structures took only 200 milliseconds, freeing up CPU resources, increasing efficiency, and lowering energy consumption. |
| Artificial intelligence accelerates dark matter search with Advanced Micro Devices, Inc. FPGAs | It achieved 100 ns AI inference latency with improved trigger identification for dark matter detection. Shortening algorithm development from months to a day enabled widespread adoption of AI inference in physics experiments. |
| Serving inference for LLMs: NVIDIA Triton Inference Server and EleutherAI | The collaboration reduced latency by up to 40% for EleutherAI's models, enhancing the overall user experience. The combination of Triton Inference Server and CoreWeave's infrastructure offered a high-performance, cost-effective solution that scales efficiently with demand, helping EleutherAI stay competitive in the rapidly changing AI landscape. |
| Finch Computing reduces inference costs using AWS Inferentia for language translation | Finch cut inference costs by over 80%, added support for three more languages, accelerated time to market for new models, and attracted new customers while keeping throughput and response times stable. |

Logos and trademarks shown above are the property of their respective owners. Their use here is for informational and illustrative purposes only.

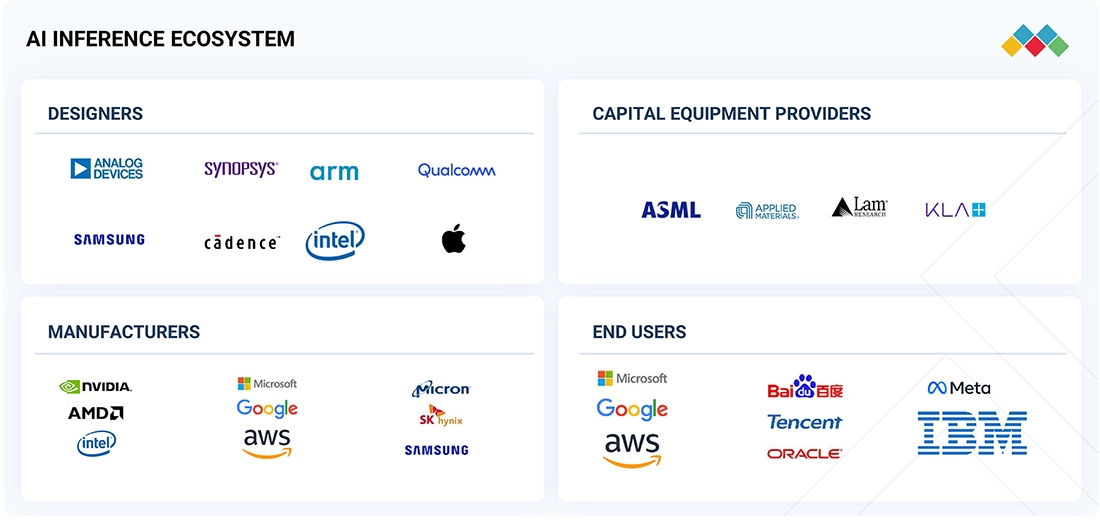

MARKET ECOSYSTEM

The AI inference market ecosystem includes designers, capital equipment providers, manufacturers, and end users. These stakeholders work together to advance AI inference by sharing knowledge, resources, and expertise. Manufacturers such as NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), and Intel Corporation (US) are central to the AI inference market and develop AI inference hardware for various applications.

Logos and trademarks shown above are the property of their respective owners. Their use here is for informational and illustrative purposes only.

MARKET SEGMENTS

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

AI Inference Market, By Compute

The AI inference market by compute includes GPU, CPU, FPGA, TPU, FSD, Inferentia, T-head, LPU, and other ASICs. The GPU segment is expected to dominate due to its superior parallel processing capability and extensive adoption across data centers for large model inference workloads. Additionally, increasing deployment of custom AI accelerators such as TPUs and domain-specific chips is driving performance optimization and energy efficiency across edge and cloud inference environments.

AI Inference Market, By Memory

This segment covers DDR and HBM memory technologies. HBM (high bandwidth memory) is gaining momentum, driven by the need for high-speed data transfer and energy efficiency in AI accelerators that handle large model inference. Additionally, the rising demand for memory bandwidth in transformer-based models and advancements in 3D-stacked DRAM are boosting adoption across next-generation inference processors.
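A back-of-the-envelope estimate shows why bandwidth matters here. When a model generates text one token at a time with a small batch, each new token typically requires streaming all model weights from memory, so memory bandwidth sets an upper bound on tokens per second. The sketch below assumes that simplified model (it ignores KV-cache reads, batching, on-chip caching, and compute limits), and both bandwidth figures are hypothetical round numbers rather than specifications of any product.

```python
def max_decode_tokens_per_second(params_billion: float,
                                 bytes_per_param: float,
                                 bandwidth_tb_per_s: float) -> float:
    """Upper bound on single-stream decode speed if every token reads all weights once."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical: an 8B-parameter model stored in 16-bit precision (2 bytes per parameter)
for label, bandwidth in [("DDR-class, 0.4 TB/s", 0.4), ("HBM-class, 4.0 TB/s", 4.0)]:
    ceiling = max_decode_tokens_per_second(8, 2, bandwidth)
    print(f"{label}: <= {ceiling:.0f} tokens/s")
```

Under these assumptions a tenfold bandwidth increase raises the ceiling tenfold, which is consistent with the point that large-model inference favors high-bandwidth memory such as HBM.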

AI Inference Market, By Network

The network segment covers NICs/network adapters, InfiniBand, Ethernet, and interconnects. NICs/network adapters are expected to grow at the highest rate, supported by rising demand for low-latency communication between AI servers and distributed inference nodes. The transition toward high-speed Ethernet (400G and beyond) and the adoption of InfiniBand interconnects in hyperscale AI clusters are further enhancing inference throughput and scalability.
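To put the link speeds in perspective, ideal transfer time is payload size in bits divided by line rate. The sketch below compares 100G, 400G, and 800G links for a hypothetical 10 GB payload, such as data exchanged between distributed inference nodes. It ignores protocol overhead, congestion, and propagation delay, so real transfers take longer.

```python
def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal transfer time: payload bits / line rate, with no protocol overhead."""
    payload_bits = payload_gigabytes * 8e9  # 1 GB taken as 1e9 bytes
    return payload_bits / (link_gbps * 1e9)


for gbps in (100, 400, 800):
    print(f"{gbps}G link: {transfer_seconds(10, gbps):.2f} s for a 10 GB payload")
```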

AI Inference Market, By Deployment

The AI inference market spans on-premises, cloud, and edge deployments. Cloud-based deployment holds the largest market share, driven by scalability, cost efficiency, and the rapid adoption of inference-as-a-service platforms by enterprises. Additionally, the edge deployment segment is experiencing significant growth due to the increasing demand for real-time inference in autonomous vehicles, industrial automation, and IoT devices.

AI Inference Market, By Application

The application segment encompasses generative AI, rule-based models, statistical models, deep learning, GANs, autoencoders, CNNs, transformer models, machine learning, NLP, and computer vision. Generative AI is anticipated to experience the highest compound annual growth rate (CAGR), driven by the increasing adoption of large language and diffusion models. The growing integration of artificial intelligence within enterprises for content creation, predictive analytics, and conversational agents is further amplifying demand across various sectors.

AI Inference Market, By End User

This segment encompasses consumers, cloud service providers (CSPs), enterprises, healthcare, BFSI, automotive, retail & e-commerce, media & entertainment, government, and others. Cloud service providers constitute the largest segment, owing to extensive AI infrastructure deployments and hyperscaler-led inference workloads. Enterprises are increasingly investing in on-premises and hybrid inference solutions to improve latency management, data security, and operational efficiency.

REGION

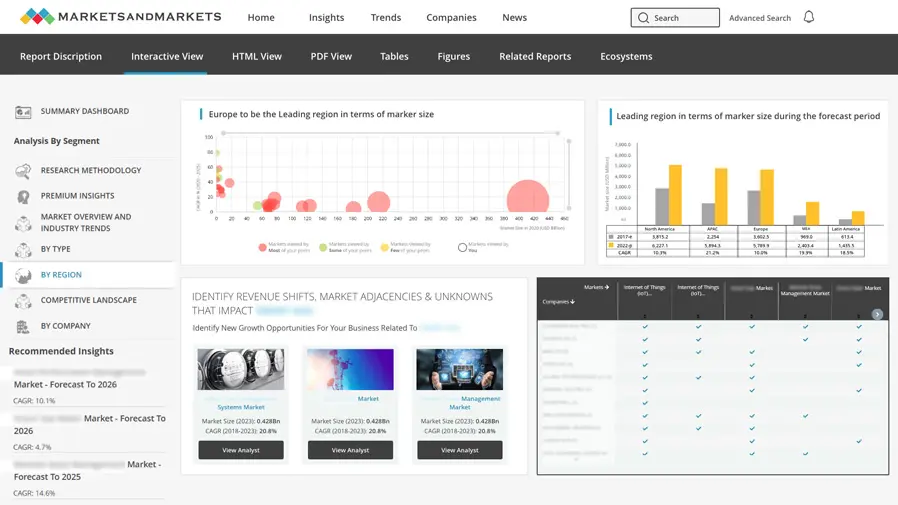

Asia Pacific to exhibit highest CAGR in global AI inference market during forecast period

Asia Pacific is projected to grow fastest, fueled by investments in sovereign AI initiatives, hyperscale data centers, and semiconductor ecosystem expansion across China, India, and Japan. Moreover, government-supported AI innovation programs and increasing cloud adoption among regional businesses are further driving market growth.

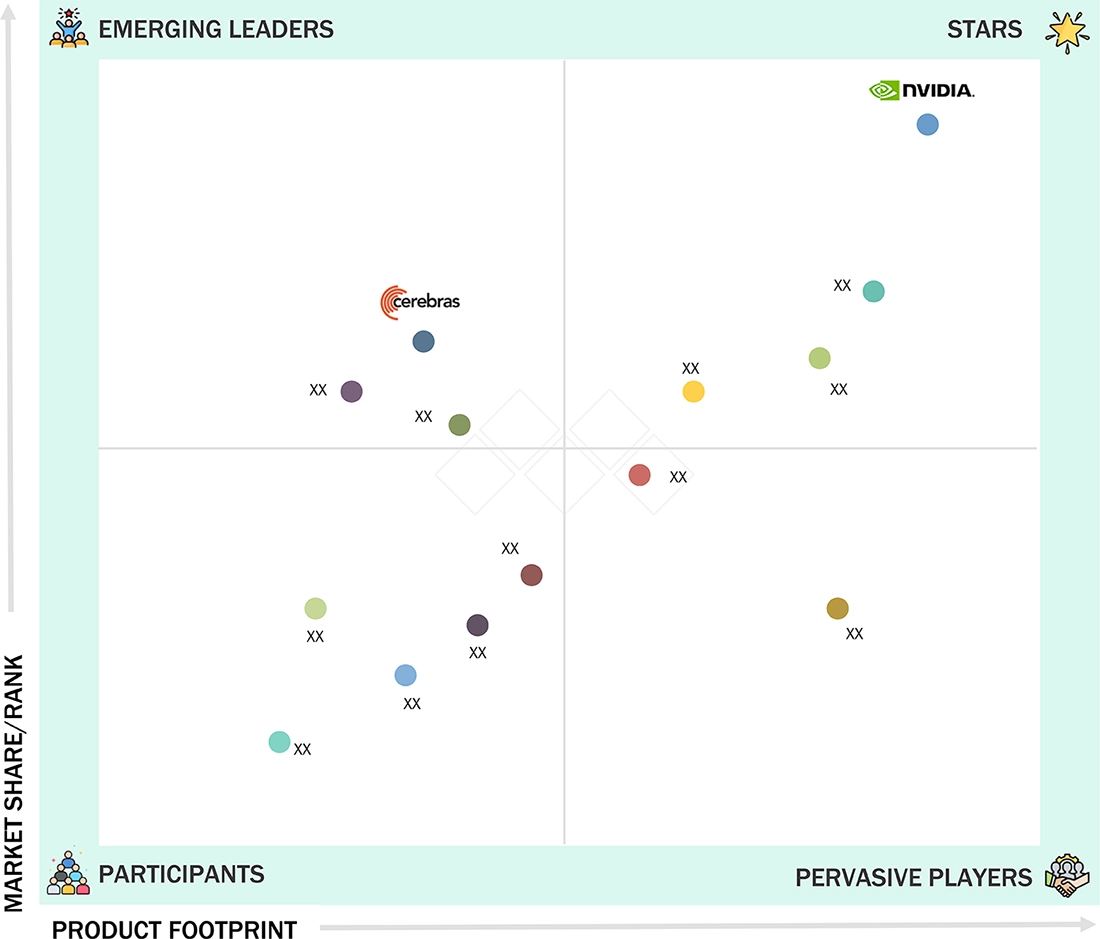

AI Inference Market: COMPANY EVALUATION MATRIX

In the AI inference market matrix, NVIDIA is positioned as a star because of its strong product presence and leading market share, showing its leadership in both innovation and adoption across OEMs. Cerebras, on the other hand, is seen as an emerging leader, holding a significant market share but with a smaller product footprint, which indicates strong growth potential as it expands its AI inference offerings.

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

KEY MARKET PLAYERS

MARKET SCOPE

| REPORT METRIC | DETAILS |
|---|---|
| Market Size in 2024 (Value) | USD 76.24 Billion |
| Market Forecast in 2030 (Value) | USD 254.98 Billion |
| Growth Rate | CAGR of 19.2% from 2025-2030 |
| Years Considered | 2021-2030 |
| Base Year | 2024 |
| Forecast Period | 2025-2030 |
| Units Considered | Value (USD Million/Billion), Volume (Thousand Units) |
| Report Coverage | Revenue forecast, company ranking, competitive landscape, growth factors, and trends |
| Segments Covered | Compute, memory, network, deployment, application, and end user |
| Regional Scope | North America, Europe, Asia Pacific, RoW |

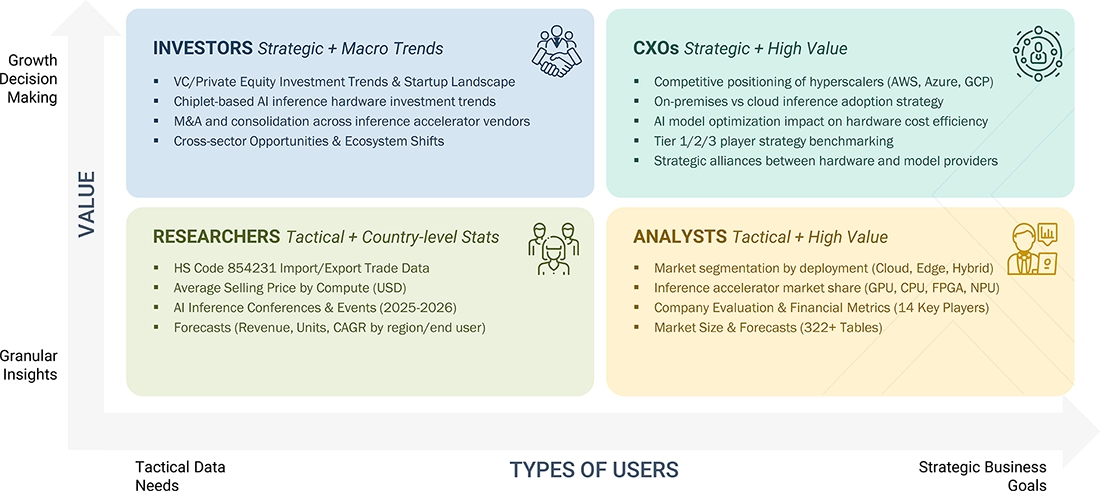

WHAT IS IN IT FOR YOU: AI Inference Market REPORT CONTENT GUIDE

DELIVERED CUSTOMIZATIONS

We have successfully delivered the following deep-dive customizations:

| CLIENT REQUEST | CUSTOMIZATION DELIVERED |
|---|---|
| Cloud Infrastructure Provider | Competitive analysis of inference accelerator architectures (GPUs, TPUs, ASICs, FPGAs) with performance benchmarking, cost-per-inference metrics, and deployment scalability assessments |
| Edge AI Chipset Manufacturer | Regional market sizing for edge inference solutions across automotive, industrial IoT, smart devices, and retail sectors with device deployment forecasts and power-performance requirements |
| Enterprise AI Platform Vendor | Deep-dive into model optimization techniques (quantization, pruning, distillation), inference runtime frameworks, and deployment toolchains across cloud and edge environments |
| Telecommunications Operator | Country-specific analysis of network edge inference deployment models, latency requirements by use case, regulatory considerations, and competitive positioning strategies |
| AI Software Startup | Comprehensive supplier ecosystem mapping for inference hardware, software frameworks, model optimization tools, and integration partnerships with technology maturity assessment |

RECENT DEVELOPMENTS

- October 2024: Advanced Micro Devices, Inc. (US) introduced the 5th Gen AMD EPYC processors for AI, cloud, and enterprise applications. They deliver maximum GPU acceleration, improved per-server performance, and enhanced AI inference capabilities. AMD EPYC 9005 processors offer dense, high-performance solutions for cloud workloads.

- October 2024: Intel Corporation (US) and Inflection AI (US) teamed up to accelerate AI adoption for businesses and developers by launching Inflection for Enterprise, an enterprise-grade AI platform. Powered by Intel Gaudi and Intel Tiber AI Cloud, the system provides customizable, scalable AI features, allowing companies to deploy AI co-workers trained on their specific data and policies.

- August 2024: Cerebras announced Cerebras Inference, which it describes as the fastest AI inference solution. It delivers 1,800 tokens per second for Llama 3.1 8B and 450 tokens per second for Llama 3.1 70B, which Cerebras reports is 20 times faster than GPU-based solutions, and offers 100x better price performance while maintaining accuracy in the 16-bit domain.

- May 2025: NinjaTech AI, a generative AI company, partnered with Amazon Web Services, Inc. to launch its new personal AI, Ninja, powered by AWS Trainium and Inferentia2 chips. These chips enable fast, scalable, and sustainable AI agent training, helping users efficiently handle complex tasks such as research and scheduling. NinjaTech AI reports up to 80% cost savings and 60% greater energy efficiency using AWS cloud capabilities.

- March 2024: NVIDIA Corporation introduced the NVIDIA Blackwell platform to help organizations build and run real-time generative AI, featuring six innovative technologies for accelerated computing. It supports AI training and real-time LLM inference for models with up to 10 trillion parameters.


Methodology

The research process for this technical, market-oriented, and commercial study of the AI inference market included the systematic gathering, recording, and analysis of data about companies operating in the market. It involved the extensive use of secondary sources, directories, and databases (Factiva, Oanda, and OneSource) to identify and collect relevant information. In-depth interviews were conducted with various primary respondents, including experts from core and related industries and preferred manufacturers, to obtain and verify critical qualitative and quantitative information as well as to assess the growth prospects of the market. Key players in the AI inference market were identified through secondary research, and their market rankings were determined through primary and secondary research. This included studying annual reports of top players and interviewing key industry experts, such as CEOs, directors, and marketing executives.

Secondary Research

In the secondary research process, various secondary sources were used to identify and collect information for this study. These include annual reports, press releases, and investor presentations of companies; whitepapers; certified publications; and articles from recognized associations and government publishing sources. Research reports from a few consortiums and councils were also consulted to structure qualitative content. Secondary sources included corporate filings (such as annual reports, investor presentations, and financial statements); trade, business, and professional associations; white papers; journals and certified publications; articles by recognized authors; gold-standard and silver-standard websites; directories; and databases. Data was also collected from secondary sources such as the International Trade Centre (ITC) and the International Monetary Fund (IMF).

List of key secondary sources

| Source | Web Link |
|---|---|
| European Association for Artificial Intelligence | https://eurai.org/ |
| Association for Machine Learning and Application (AMLA) | https://www.icmla-conference.org/ |
| Association for the Advancement of Artificial Intelligence | https://aaai.org/ |
| Generative AI Association (GENAIA) | https://www.generativeaiassociation.org/ |
| International Monetary Fund | https://www.imf.org/ |
| Institute of Electrical and Electronics Engineers (IEEE) | https://ieeexplore.ieee.org/ |

Primary Research

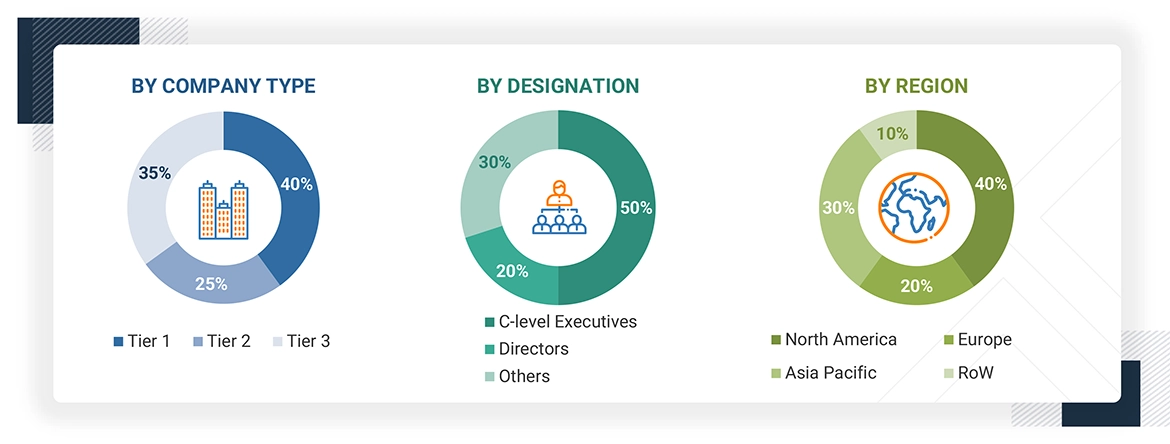

Extensive primary research was conducted after understanding and analyzing the AI inference market scenario through secondary research. Several primary interviews were conducted with key opinion leaders from both demand- and supply-side vendors across four major regions: North America, Europe, Asia Pacific, and RoW. Approximately 30% of the primary interviews were conducted with the demand side and 70% with the supply side. Primary data was collected through questionnaires, emails, and telephone interviews. Various departments within organizations, such as sales, operations, and administration, were contacted to provide a holistic viewpoint in the report.

Note: Other designations include technology heads, media analysts, sales managers, marketing managers, and product managers.

The three tiers of companies are based on their total revenues as of 2023: Tier 1: >USD 1 billion, Tier 2: USD 500 million–1 billion, and Tier 3: <USD 500 million.


Market Size Estimation

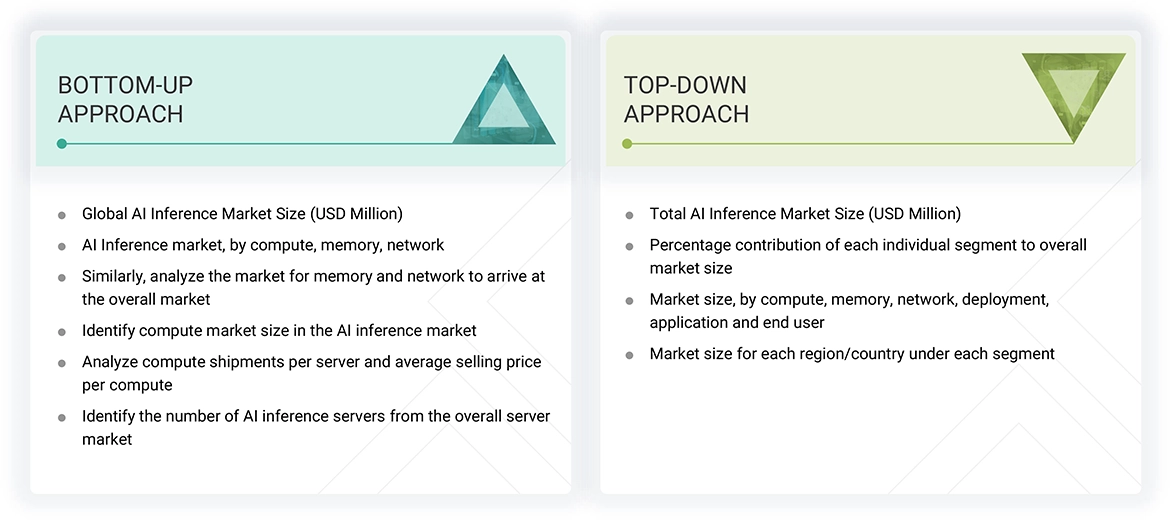

In the complete market engineering process, top-down and bottom-up approaches and several data triangulation methods have been used to perform the market size estimation and forecasting for the overall market segments and subsegments listed in this report. Extensive qualitative and quantitative analyses have been performed on the complete market engineering process to list the key information/insights throughout the report. The following table explains the process flow of the market size estimation.

The key players in the market were identified through secondary research, and their rankings in the respective regions were determined through primary and secondary research. This entire procedure involved the study of the annual and financial reports of top players, and interviews with industry experts such as chief executive officers, vice presidents, directors, and marketing executives for quantitative and qualitative key insights. All percentage shares, splits, and breakdowns were determined using secondary sources and verified through primary sources. All parameters that affect the markets covered in this research study were accounted for, viewed in extensive detail, verified through primary research, and analyzed to obtain the final quantitative and qualitative data. This data was consolidated, supplemented with detailed inputs and analysis from MarketsandMarkets, and presented in this report.

AI Inference Market: Bottom-Up Approach

- Initially, the companies offering AI inference solutions were identified. Their products were mapped based on compute, memory, network, deployment, application, and end user.

- After understanding the different types of AI inference offerings from various manufacturers, the market was categorized into segments based on the data gathered through primary and secondary sources.

- To derive the global AI inference market, global AI server shipments of top players within the report's scope were tracked.

- A suitable penetration rate was assigned to compute, memory, and network offerings to derive AI inference shipments.

- We derived the AI inference market for each offering using the average selling price (ASP) at which a particular company offers its devices. The ASP of each offering was identified from secondary sources and validated through primary interviews.

- For the CAGR, market trend analysis was carried out by examining the industry penetration rate and the demand for and supply of AI inference offerings across end users.

- The AI inference market is also tracked through the data sanity method: the revenues of key providers were analyzed through annual reports and press releases and summed to derive the overall market.

- For each company, a percentage is assigned to its overall revenue or, in a few cases, segmental revenue to derive its AI inference revenue. This percentage is based on the company's product portfolio and range of AI inference offerings.

- The estimates at every level were verified and cross-checked through discussions with key opinion leaders, including CXOs, directors, and operations managers, and finally with domain experts at MarketsandMarkets.

- Various paid and unpaid sources of information, such as annual reports, press releases, white papers, and databases, have been studied.
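The two bottom-up calculations described above can be sketched as follows: (1) tracked AI server shipments multiplied by a penetration rate per offering and then by that offering's average selling price (ASP), and (2) the data sanity cross-check, which sums an assigned share of each key provider's revenue. Every name and number below is a hypothetical placeholder for illustration, not data from this report, and the sketch assumes one unit of an offering per penetrated server.

```python
from dataclasses import dataclass


@dataclass
class Offering:
    name: str
    penetration_rate: float  # share of tracked AI servers assumed to carry this offering
    asp_usd: float           # average selling price per unit, USD


def shipment_based_market(server_shipments: int, offerings: list[Offering]) -> dict[str, float]:
    """Method 1: shipments x penetration rate = units (one per server); units x ASP = value."""
    return {
        o.name: server_shipments * o.penetration_rate * o.asp_usd
        for o in offerings
    }


def revenue_based_market(revenue_usd: dict[str, float], inference_share: dict[str, float]) -> float:
    """Method 2 (data sanity): sum of each company's revenue x its assigned AI inference share."""
    return sum(rev * inference_share[company] for company, rev in revenue_usd.items())


# Hypothetical inputs only
offerings = [
    Offering("compute", penetration_rate=0.9, asp_usd=20_000),
    Offering("memory", penetration_rate=0.8, asp_usd=5_000),
    Offering("network", penetration_rate=0.7, asp_usd=1_000),
]
by_offering = shipment_based_market(100_000, offerings)
total_shipment_based = sum(by_offering.values())

total_revenue_based = revenue_based_market(
    {"Company A": 10e9, "Company B": 2e9},
    {"Company A": 0.15, "Company B": 0.25},
)

print(f"Shipment-based: USD {total_shipment_based / 1e9:.2f} billion")
print(f"Revenue-based:  USD {total_revenue_based / 1e9:.2f} billion")
```

Comparing the two totals is the kind of cross-check the data sanity step is meant to provide; a large gap would prompt revisiting the penetration rates, ASPs, or revenue shares.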

AI Inference Market: Top-Down Approach

- The global AI inference market size was estimated using the data sanity method for major companies.

- The AI inference market showed an upward growth trend during the study period, as it is in the early stage of its product life cycle, with major players beginning to expand into various application areas.

- Types of AI inference offerings, their features and properties, geographical presence, and key applications served by all players in the AI inference market were studied to estimate the percentage split of the segments.

- Different types of AI inference offerings, such as compute, memory, and network, and their penetration among end users were also studied.

- Based on secondary research, the market split for AI inference by compute, memory, network, deployment, application, and end user was estimated.

- The demand generated by companies operating in different end-user segments was analyzed.

- Multiple discussions with key opinion leaders across major companies involved in developing AI inference offerings and related components were conducted to validate the market split by compute, memory, network, deployment, application, and end user.

- The regional splits were estimated using secondary sources based on factors such as the number of players in a specific country and region and the adoption and use cases of each implementation type with respect to applications in the region.
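The top-down split runs in the opposite direction: a total market estimate is allocated to segments using percentage shares. The sketch below uses the report's 2030 forecast as the total, but the deployment shares are hypothetical placeholders rather than the report's findings. The function also rejects share sets that do not sum to 100%.

```python
TOTAL_2030_USD_BN = 254.98  # report's 2030 forecast


def split_market(total: float, shares: dict[str, float]) -> dict[str, float]:
    """Allocate a total market value across segments by percentage share."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("segment shares must sum to 1.0")
    return {segment: total * share for segment, share in shares.items()}


# Hypothetical deployment shares, for illustration only
deployment_shares = {"cloud": 0.60, "on-premises": 0.25, "edge": 0.15}
for segment, value in split_market(TOTAL_2030_USD_BN, deployment_shares).items():
    print(f"{segment}: USD {value:.2f} billion")
```

The same function can be applied to the regional split, and nesting it (for example, region first, then deployment within each region) yields finer breakdowns.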

AI Inference Market: Top-Down and Bottom-Up Approach

Data Triangulation

After arriving at the overall size of the AI inference market through the process explained above, the overall market has been split into several segments. Data triangulation procedures have been employed to complete the overall market engineering process and arrive at the exact statistics for all the segments, wherever applicable. The data has been triangulated by studying various factors and trends from both the demand and supply sides. The market has also been validated using both top-down and bottom-up approaches.
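One simple way to picture the reconciliation step is to compare the top-down and bottom-up totals, flag them if they diverge by more than a tolerance, and otherwise combine them. The 5% relative tolerance and the plain average below are assumptions for illustration; the report does not specify its triangulation rule.

```python
def triangulate(top_down: float, bottom_up: float, tolerance: float = 0.05) -> float:
    """Combine two market estimates if they agree within a relative tolerance."""
    midpoint = (top_down + bottom_up) / 2
    relative_gap = abs(top_down - bottom_up) / midpoint
    if relative_gap > tolerance:
        # Too far apart: the underlying assumptions should be revisited, e.g. with primary sources
        raise ValueError(f"estimates differ by {relative_gap:.1%}")
    return midpoint


print(triangulate(100.0, 104.0))  # hypothetical estimates, USD billion; prints 102.0
```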

Market Definition

AI inference is the process of using a trained artificial intelligence (AI) model to make predictions, classify data, or extract insights from new, unseen input data. It involves applying a model to tasks such as image recognition, language processing, or real-time analytics. Optimized for efficiency and speed, AI inference often runs on specialized hardware, enabling applications ranging from autonomous systems to personalized recommendations. It encompasses a combination of high-performance computing resources (e.g., GPUs, CPUs, and FPGAs), memory solutions (e.g., DDR and HBM), and networking components (e.g., network adapters and interconnects) optimized for handling AI workloads. It is used in generative AI, machine learning, natural language processing (NLP), and computer vision applications.
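To illustrate the distinction between training and inference in this definition: inference only runs the forward computation of an already-trained model on new input, without updating any weights. The sketch below uses a tiny logistic-regression classifier whose weights and bias are hypothetical stand-ins for values learned during a separate training run.

```python
import math

# Hypothetical parameters, assumed to come from a completed training run (frozen at inference time)
WEIGHTS = [0.8, -1.2, 0.5]
BIAS = -0.1


def predict_proba(features: list[float]) -> float:
    """Inference: a forward pass (weighted sum + sigmoid); the parameters are never modified."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def classify(features: list[float], threshold: float = 0.5) -> int:
    """Turn the predicted probability into a class label."""
    return int(predict_proba(features) >= threshold)


new_input = [1.0, 0.2, 0.4]  # unseen data
print(classify(new_input), round(predict_proba(new_input), 3))
```

Production inference applies the same idea at much larger scale, which is why the hardware listed above is optimized for fast, repeated forward passes.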

Key Stakeholders

- Government and financial institutions and investment communities

- Analysts and strategic business planners

- Semiconductor product designers and fabricators

- Application providers

- AI solution providers

- AI platform providers

- AI system providers

- Manufacturers and AI technology users

- Business providers

- Component and device suppliers and distributors

- Professional service/solution providers

- Research organizations

- Technology standard organizations, forums, alliances, and associations

- Technology investors

- Investors (private equity firms, venture capitalists, and others)

Report Objectives

- To define, describe, segment, and forecast the size of the AI inference market, in terms of value, based on compute, memory, network, deployment, application, end user, and region

- To forecast the size of the market segments for four major regions—North America, Europe, Asia Pacific, and RoW

- To define, describe, segment, and forecast the size of the AI inference market, in terms of volume, based on compute

- To give detailed information regarding drivers, restraints, opportunities, and challenges influencing the growth of the market

- To provide a value chain analysis, ecosystem analysis, case study analysis, patent analysis, trade analysis, technology analysis, pricing analysis, key conferences and events, key stakeholders and buying criteria, Porter's five forces analysis, investment and funding scenario, and regulations pertaining to the market

- To provide a detailed overview of the value chain analysis of the AI inference ecosystem

- To strategically analyze micromarkets with regard to individual growth trends, prospects, and contributions to the total market

- To analyze opportunities for stakeholders by identifying high-growth segments of the market

- To strategically profile the key players, comprehensively analyze their market positions in terms of ranking and core competencies, and provide a competitive market landscape

- To analyze strategic approaches such as product launches, acquisitions, agreements, and partnerships in the AI inference market

Available Customizations

With the given market data, MarketsandMarkets offers customizations according to the company’s specific needs. The following customization options are available for the report:

Country-wise Information:

- Detailed analysis and profiling of additional market players (up to 7)
